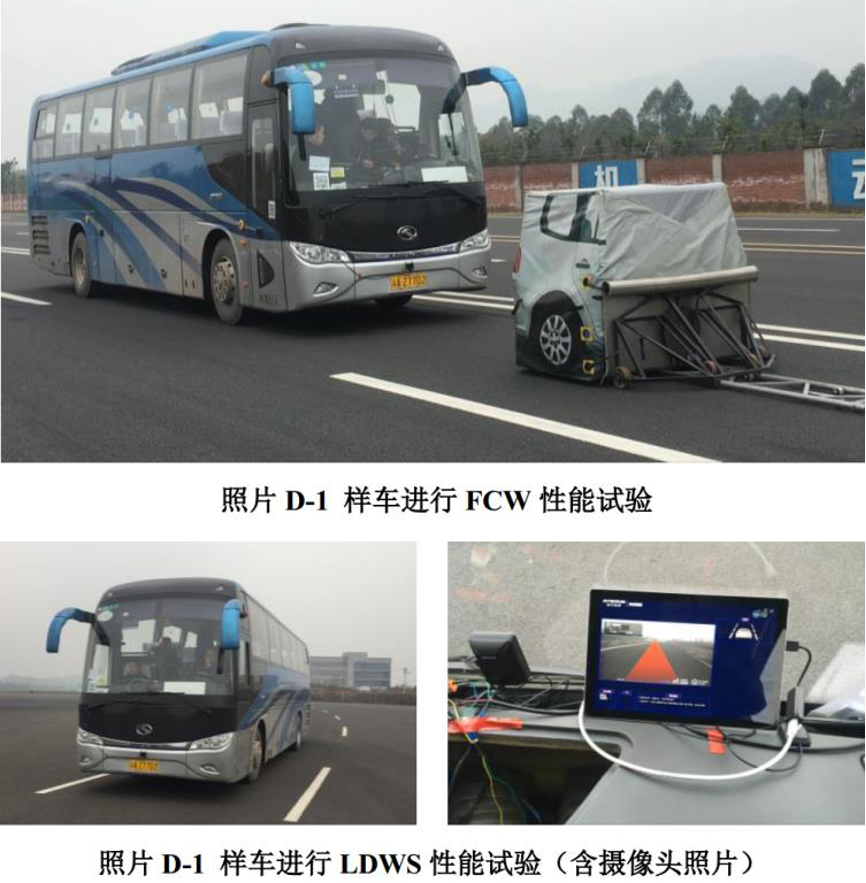

The Ministry of Transport officially implemented "Safety and Technical Conditions for Operating Buses" (JT/T 1094-2016) on April 1, 2017. The standard explicitly requires operating buses longer than 9 meters to be fitted with a lane departure warning system (LDWS) and a forward collision warning system (FCWS) that comply with the JT/T 883 standard (hereinafter the "883 standard"), and it stipulates a 13-month transition period. LDWS and FCWS will therefore become standard equipment on operating buses, and passing the 883 standard test becomes an important threshold for ADAS products to be accepted by commercial vehicle customers.

Autocruis recently received more good news: the company's FPGA-based embedded forward ADAS system has passed the JT/T 883 test.

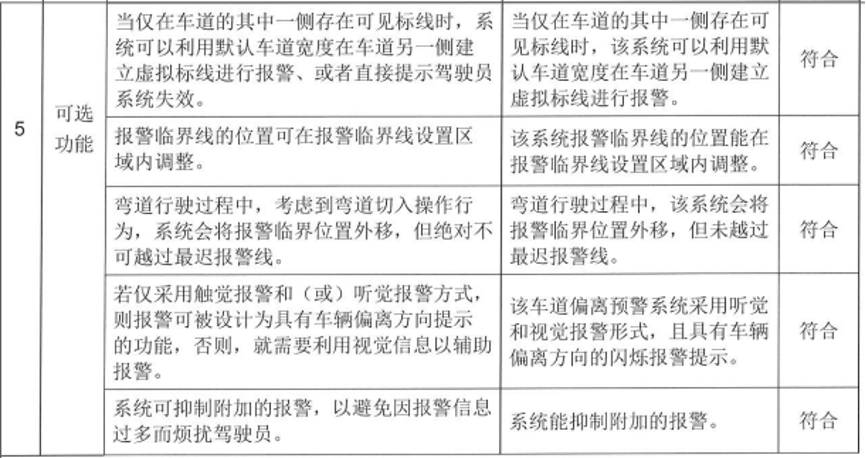

Notably, the Autocruis ADAS system not only passed all mandatory requirements in this test (basic requirements, general requirements, installation and use requirements, technical requirements, etc.), but also completed every non-mandatory "optional features" test. According to the test organization, most ADAS companies do not attempt these more demanding optional tests.

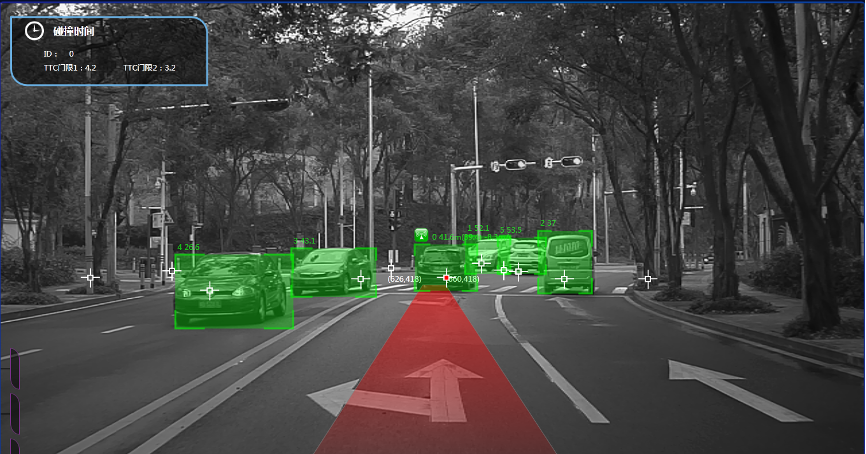

In the FCWS test, the system accurately detected the vehicle ahead and the left and right lane lines in real time, with a forward distance measurement error of less than 10 cm, far tighter than the official requirement (<1 m). In the LDWS test, when the lane line on one side of the road is unclear or missing, the system uses the visible marking on the other side of the lane to construct a virtual lane line, which serves as a new reference for lane departure warning. Lane line detection is harder when the vehicle enters a curve, but the forward ADAS system can still quickly identify curved lane lines of varying curvature using semantic segmentation.
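
One way to picture the virtual lane line idea is as a geometric offset of the visible line. The sketch below is only an illustration, not Autocruis's method: it assumes the visible line is already available as a polynomial y(x) in a flat ground-plane frame (x forward, y to the left, in meters), and that the lane width is known. The function name `virtual_lane_line` and its parameters are hypothetical.

```python
import numpy as np

def virtual_lane_line(coeffs, lane_width_m, x_samples, side):
    """Offset a visible lane line by one lane width along its normal.

    coeffs       : polynomial coefficients of the visible line y(x),
                   highest power first (assumed ground-plane frame).
    lane_width_m : assumed lane width in meters (hypothetical input).
    x_samples    : forward distances at which to sample the line.
    side         : +1 to build the virtual line on the +y (left) side,
                   -1 to build it on the -y (right) side.
    Returns an (N, 2) array of (x, y) points on the virtual line.
    """
    x = np.asarray(x_samples, dtype=float)
    y = np.polyval(coeffs, x)
    slope = np.polyval(np.polyder(coeffs), x)

    # Unit normal to the curve, pointing toward +y.
    norm = np.hypot(1.0, slope)
    nx, ny = -slope / norm, 1.0 / norm

    vx = x + side * lane_width_m * nx
    vy = y + side * lane_width_m * ny
    return np.column_stack([vx, vy])

# Visible left line 1.8 m to the left; build the missing right line.
points = virtual_lane_line([0.0, 1.8], 3.6, [0.0, 10.0, 20.0], side=-1)
```

Offsetting along the normal rather than shifting y directly keeps the two lines a constant distance apart on curves as well as on straight sections.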

Beyond meeting the JT/T 883 standard, the ADAS system also provides the following core technologies (functions) to meet the growing demand for high-performance LKA, AEB and ACC in passenger cars:

Multi-road target detection

Built on deep learning and running on an automotive-grade FPGA platform, the Autocruis ADAS system also incorporates core functions such as pedestrian collision warning (PCW) and traffic sign recognition (TSR). It detects road targets accurately and in real time across a range of weather conditions (sunny, cloudy, rain, snow, etc.) and road environments (urban, highway, rural). Supported targets include all types of vehicles (cars, buses, trucks, irregular motor vehicles, irregularly shaped vehicles, special vehicles, etc.), pedestrians in various postures (standing, walking, squatting, etc.) and traffic signs (warning, prohibition, instruction and auxiliary signs, traffic lights, etc.).

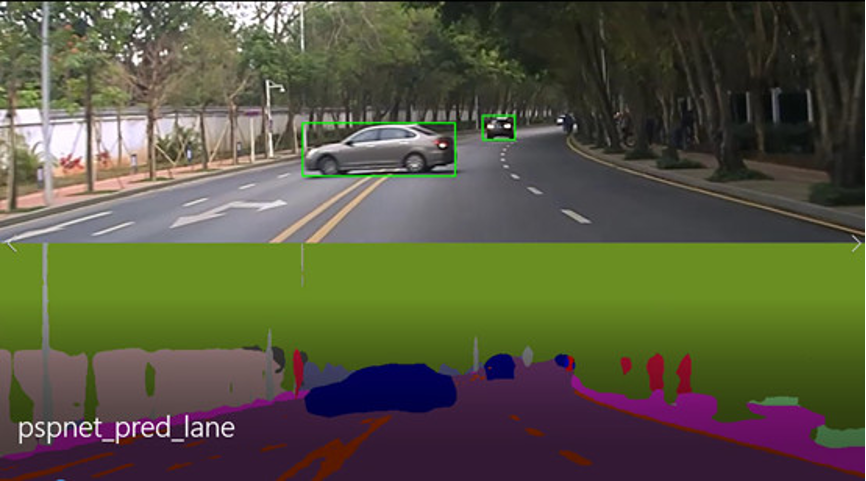

Semantic segmentation techniques

Image semantic segmentation is the process by which a machine automatically segments and recognizes the content of an image. Using deep learning algorithms such as CNNs, the Autocruis forward ADAS system performs pixel-level semantic segmentation of the road scene, quickly identifying vehicles, lane lines, drivable areas and other targets, and supplying the system with accurate lane line and obstacle information.

Using high-order polynomial fitting and an efficiently compressed PSPNet, the system performs road semantic segmentation, target detection and lane line detection at 30 fps with a high detection rate and high accuracy. It recognizes multiple lanes and handles straight, curved and unclear lane lines, providing reliable and safe drivable-area recognition for the vehicle.
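
The polynomial fitting step can be illustrated with a short sketch. It assumes the segmentation network has already produced a binary mask for a single lane line, and it fits the column position as a function of the row, since lane lines run roughly top to bottom in a forward camera image. The function name `fit_lane_polynomial`, the default degree and the mask layout are assumptions for illustration; the source does not describe the actual fitting procedure.

```python
import numpy as np

def fit_lane_polynomial(mask, degree=3):
    """Fit column = f(row) to the lane pixels of a binary mask.

    mask   : 2D array, nonzero where a pixel was labeled as lane line
             (assumed to contain a single lane line).
    degree : polynomial order; 3 is an illustrative default.
    Returns polynomial coefficients, highest power first.
    """
    rows, cols = np.nonzero(mask)
    if rows.size <= degree:
        raise ValueError("not enough lane pixels to fit a polynomial")
    return np.polyfit(rows, cols, degree)

# Synthetic curved lane line: col = 0.01 * row^2 + 20
mask = np.zeros((100, 200), dtype=np.uint8)
for r in range(100):
    mask[r, int(round(0.01 * r * r + 20))] = 1

coeffs = fit_lane_polynomial(mask, degree=2)
predicted_cols = np.polyval(coeffs, np.arange(100))
```

A fitted curve of this kind gives a compact lane description (a handful of coefficients per line) that downstream logic such as departure warning can evaluate at any row.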

Vision and radar fusion

Built on mass-producible multi-sensor fusion technology, the Autocruis vision and radar fusion solution collects road target data separately through automotive-grade cameras and millimeter-wave radar. It applies feature extraction, pattern recognition and combined confidence weighting to the raw data, associates and matches the target lists by category, uses calibration information for adaptive search, tracking and matching, and then merges all sensor data belonging to the same target to produce reliable, high-precision perception information.
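
The matching-by-category and confidence-weighting steps might look roughly like the sketch below. It is a simplified illustration under several assumptions: both sensors report targets in a shared ground frame, both attach a category and a confidence, association is greedy nearest-neighbor inside a fixed gate, and the fused confidence is simply the larger of the two. The `Detection` type, `associate`, `fuse` and the 2 m gate are hypothetical names and values, not taken from the Autocruis system.

```python
import math
from dataclasses import dataclass

@dataclass
class Detection:
    category: str      # e.g. "car", "pedestrian"
    x: float           # longitudinal distance in meters
    y: float           # lateral offset in meters
    confidence: float  # 0..1, as reported by the sensor pipeline

def associate(camera, radar, gate_m=2.0):
    """Greedily pair camera and radar targets of the same category."""
    pairs, used = [], set()
    for cam in camera:
        best_idx, best_dist = None, gate_m
        for j, rad in enumerate(radar):
            if j in used or rad.category != cam.category:
                continue
            dist = math.hypot(cam.x - rad.x, cam.y - rad.y)
            if dist < best_dist:
                best_idx, best_dist = j, dist
        if best_idx is not None:
            used.add(best_idx)
            pairs.append((cam, radar[best_idx]))
    return pairs

def fuse(cam, rad):
    """Confidence-weighted average of two matched detections."""
    total = cam.confidence + rad.confidence
    if total == 0:
        raise ValueError("both detections have zero confidence")
    x = (cam.confidence * cam.x + rad.confidence * rad.x) / total
    y = (cam.confidence * cam.y + rad.confidence * rad.y) / total
    # Assumption: keep the higher of the two confidences.
    return Detection(cam.category, x, y, max(cam.confidence, rad.confidence))

camera = [Detection("car", 30.0, 0.0, 0.5)]
radar = [Detection("pedestrian", 30.2, 0.1, 0.9), Detection("car", 31.0, 0.2, 0.5)]
fused = [fuse(c, r) for c, r in associate(camera, radar)]
```

A production system would typically use per-axis weights (radar tends to measure range better, cameras lateral position) and track targets over time; this sketch only shows the basic weighting idea.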

At present, the fusion scheme maintains a valid target library in both single-target and multi-target scenarios. It synchronizes vision and radar in space and time through accurate conversion between the radar, 3D world, camera, image and pixel coordinate systems. This reduces the loss of fused data, improves the range, speed and accuracy of detection, lets the two sensors compensate for each other's weaknesses, and greatly improves the recognition accuracy and environmental adaptability of the vehicle's perception system.
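
The chain of coordinate conversions listed above can be sketched with a standard pinhole camera model. This is a generic illustration rather than the Autocruis implementation: it assumes 4x4 homogeneous extrinsic matrices obtained from calibration, a 3x3 intrinsic matrix with no skew, and no lens distortion. The function name `radar_to_pixel` and the matrix names are hypothetical.

```python
import numpy as np

def radar_to_pixel(p_radar, T_world_radar, T_cam_world, K):
    """Project a radar point into pixel coordinates.

    p_radar       : (3,) point in the radar frame, in meters.
    T_world_radar : 4x4 transform, radar frame -> world frame.
    T_cam_world   : 4x4 transform, world frame -> camera frame
                    (camera z axis pointing forward).
    K             : 3x3 camera intrinsic matrix (skew assumed zero).
    Returns (u, v) as an array, or None if the point is behind the camera.
    """
    p = np.append(np.asarray(p_radar, dtype=float), 1.0)

    p_world = T_world_radar @ p      # radar -> world
    p_cam = T_cam_world @ p_world    # world -> camera
    x, y, z = p_cam[:3]
    if z <= 0:
        return None

    # camera -> normalized image plane
    x_img, y_img = x / z, y / z

    # image plane -> pixel
    u = K[0, 0] * x_img + K[0, 2]
    v = K[1, 1] * y_img + K[1, 2]
    return np.array([u, v])

K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
identity = np.eye(4)
pixel = radar_to_pixel([1.0, 0.5, 20.0], identity, identity, K)
```

Once a radar target is expressed in pixel coordinates, it can be compared directly with the bounding boxes produced by the vision pipeline, which is what makes spatial association between the two sensors possible.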